ABOUT ME

Sandeep Yerra

MS Applied Data Analytics, Boston University

MBA, IIM Indore

B.Tech CS, IIIT Hyderabad

I'm Sandeep Yerra, a Senior Data Scientist with a strong background in predictive modeling, AI, and machine learning. My career includes significant contributions to business operations, derivatives trading, and sales at large organizations. Skilled in Python, R, AWS, GCP, and the broader ML ecosystem, I excel at turning data into actionable insights. My academic journey includes an MS in Applied Data Analytics from Boston University, which complements my practical data science expertise. At my core, I am driven by a passion for data-driven decision-making and innovation.

Outside of my professional life, I cherish time spent with my family, especially my wife and daughter, exploring new places and cultures together. I'm passionate about sports and often play football, cricket, and badminton. These activities not only fuel my competitive spirit but also create memorable moments with my loved ones. Music also plays a significant role in my life; I unwind by playing the guitar. I am dedicated to lifelong learning and consistently seek new skills and knowledge to grow both personally and professionally.

For additional details about my academic background, please refer to my resume.

SELECTED PROJECTS

Quantization of Deep Learning Models for Human Activity Recognition [GitHub]

- This project applies quantization to deep learning models to improve the performance and efficiency of Human Activity Recognition (HAR) systems. Working under the guidance of Prof. Reza Rawassizadeh at Boston University, we explored several quantization and pruning techniques to reduce model size and inference cost while preserving accuracy.

- Techniques implemented using PyTorch, TensorFlow, and Core ML:

  - Dynamic Quantization

  - Quantization-Aware Training

  - Static Quantization

  - Structured and Unstructured Pruning

- We applied these techniques to MLP, CNN, LSTM, GRU, and Transformer models trained and tested on the WISDM dataset, evaluating each model on size, accuracy, CPU usage, and inference time. The optimized models were significantly more efficient without compromising accuracy.
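To illustrate the arithmetic behind two of the techniques above, here is a minimal, framework-free sketch of symmetric per-tensor int8 quantization and unstructured magnitude pruning on a plain list of weights. It is not the project's code: the function names and sample weights are hypothetical, and structured pruning (removing whole channels or neurons) is not shown. In practice PyTorch, TensorFlow, and Core ML perform these steps internally; PyTorch's dynamic quantization, for example, stores layer weights as int8 ahead of time and quantizes activations on the fly at inference.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero out the smallest-magnitude fraction of weights."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.02, 0.9, -0.03, 0.3]  # toy values, not real model weights
q, scale = quantize_int8(weights)
print(q, scale)
print(dequantize(q, scale))
print(magnitude_prune(weights, sparsity=0.5))
```

Each dequantized weight differs from the original by at most half a quantization step (`scale / 2`), which is why accuracy often survives int8 conversion when weights are well distributed.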

Gaussian Splatting

- Used Gaussian splatting to reconstruct and render 3D scenes from custom sets of 2D images.

- Created a 3D self-portrait scene from approximately 280 2D images.

- Reconstructed a 3D scene from drone-captured 2D images using Gaussian splatting.

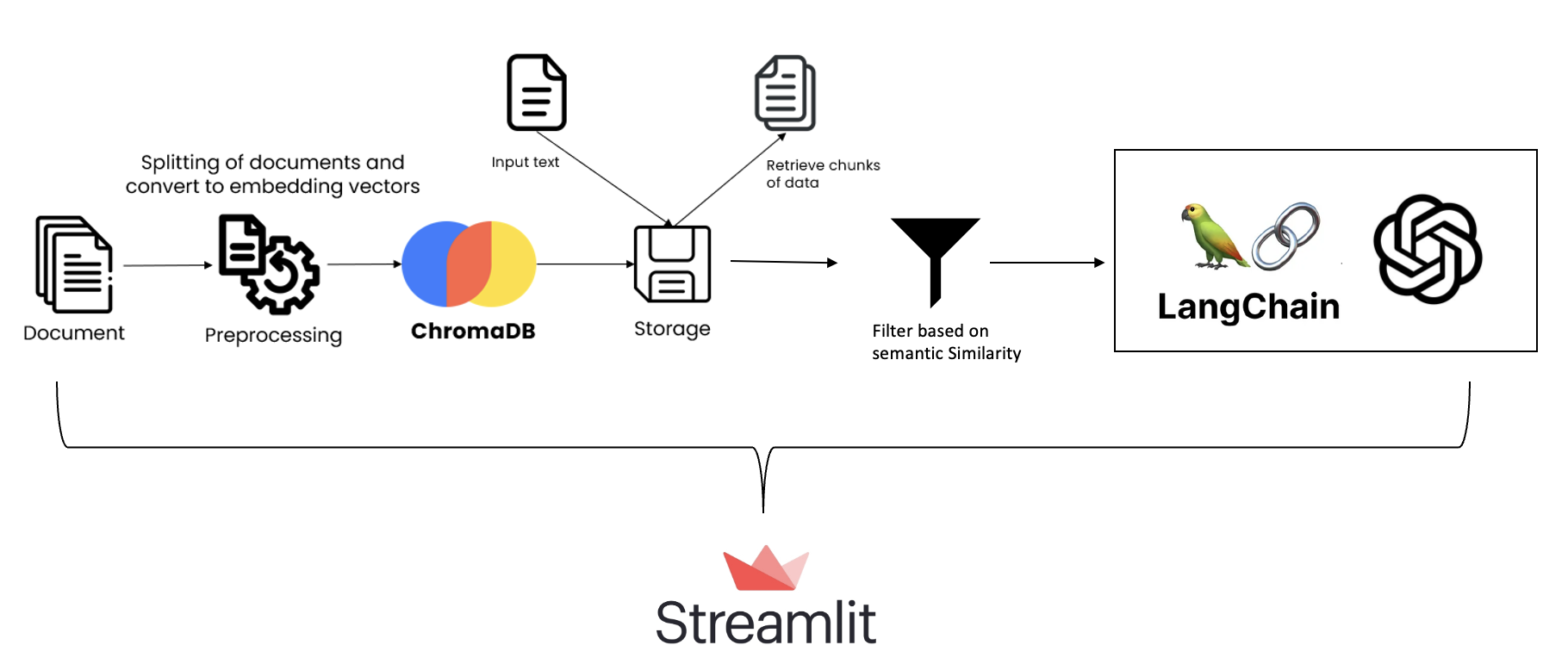

Context Limitation of LLMs [GitHub]

- Built an LLM-based chatbot in Python for question answering over custom PDFs, using retrieval-augmented generation (RAG), Streamlit, LangChain, and Chroma DB.

- Implemented context limitation by experimenting with similarity matching using Sentence Transformers and autoencoders.
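One common way to implement this kind of context limitation is a similarity gate: embed the question, compare it with each stored chunk embedding, and pass only sufficiently similar chunks to the LLM, declining when nothing qualifies. The sketch below assumes the embeddings have already been computed (for example with a Sentence Transformers model); the toy 3-d vectors, the 0.75 threshold, `top_k`, and the function names are hypothetical, and the autoencoder variant is not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_context(query_vec, chunks, threshold=0.75, top_k=2):
    """Keep at most top_k chunks whose similarity to the query clears the threshold.

    `chunks` is a list of (text, embedding) pairs. An empty result signals that the
    question is out of scope for the indexed documents.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in chunks]
    relevant = [(s, t) for s, t in scored if s >= threshold]
    relevant.sort(reverse=True)  # most similar first
    return [t for _, t in relevant[:top_k]]


# Toy embeddings standing in for real sentence-embedding vectors.
chunks = [
    ("Refund policy: 30 days", [0.9, 0.1, 0.0]),
    ("Shipping takes 5 days", [0.1, 0.9, 0.1]),
    ("Warranty covers defects", [0.7, 0.3, 0.1]),
]
print(select_context([1.0, 0.2, 0.0], chunks))  # in-scope question
print(select_context([0.0, 0.0, 1.0], chunks))  # off-topic question
```

Raising the threshold makes the chatbot refuse more often but reduces answers drawn from loosely related passages; the right value depends on the embedding model and is usually tuned empirically.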

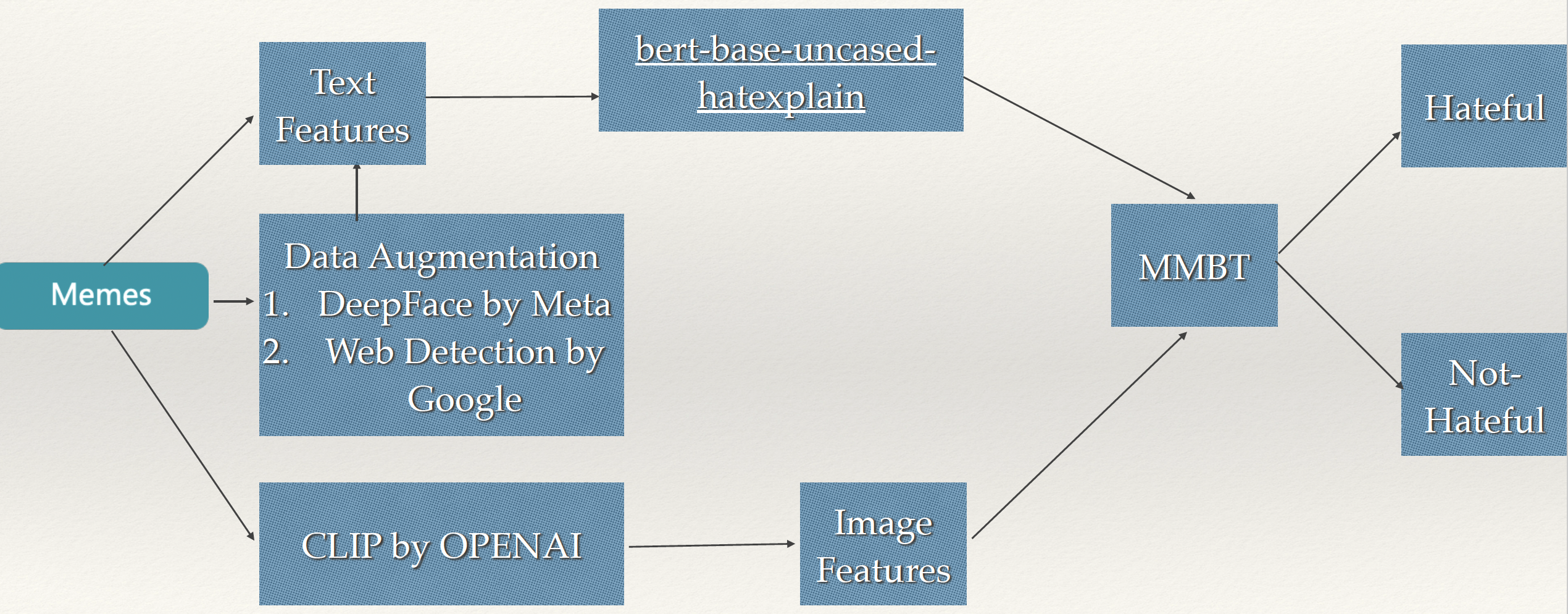

Multimodal Hateful Meme Detection [GitHub]

- Experimented with VisualBERT, CLIP, and Hugging Face MMBT, combined with Google Entity Detection and Meta DeepFace.

- Achieved 78% accuracy in hateful meme detection with a multimodal architecture built in PyTorch.

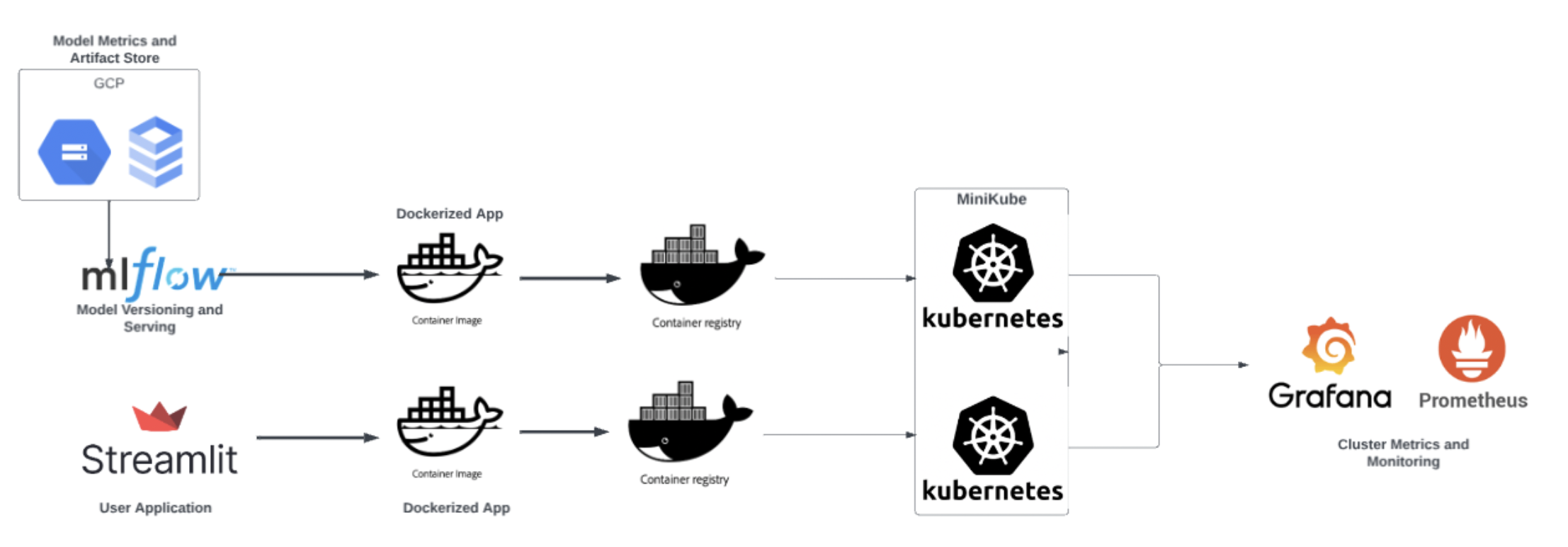

MLOps Pipeline Using Kubernetes [GitHub]

- Built an MLOps pipeline integrating MLflow, Kubernetes, Docker, Grafana, Prometheus, PostgreSQL, GCP, and Streamlit.

- Deployed an ML model to MLflow and Streamlit hosted on Kubernetes (K8s), with PostgreSQL hosted on GCP, and monitored the deployment with Grafana and Prometheus.

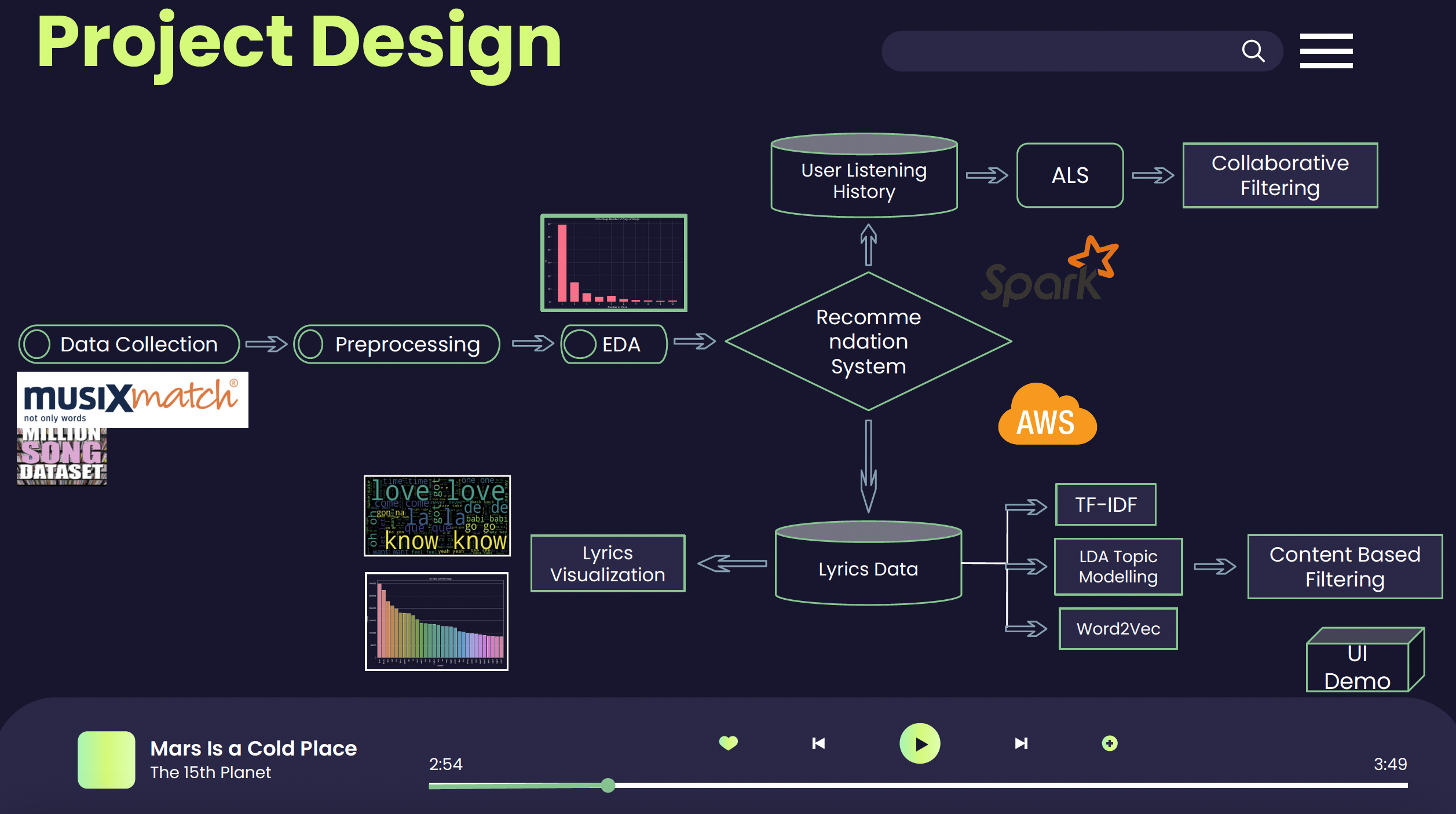

Music Recommendation System at Scale [GitHub]

- Used collaborative filtering (ALS), content-based filtering (Word2Vec, TF-IDF, LDA), and locality-sensitive hashing (LSH).

- Generated playlist recommendations and integrated collaborative filtering using Elephas and PySpark, achieving low RMSE.
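Locality-sensitive hashing lets a recommender find similar tracks without comparing every pair of items. The sketch below shows random-hyperplane LSH (sometimes called SimHash), one LSH family for cosine similarity, over item embedding vectors. It is illustrative only: the project description does not say which LSH family was used, and the function names, the number of hyperplanes, and the toy vectors are hypothetical. It assumes item embeddings (for example Word2Vec or TF-IDF vectors) already exist.

```python
import random


def make_hyperplanes(dim, n_planes, seed=0):
    """Draw random Gaussian hyperplane normals; a fixed seed keeps signatures reproducible."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]


def signature(vec, planes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(int(sum(p * v for p, v in zip(plane, vec)) >= 0) for plane in planes)


def build_index(items, planes):
    """Bucket item ids by signature; only items sharing a bucket are compared later."""
    buckets = {}
    for item_id, vec in items.items():
        buckets.setdefault(signature(vec, planes), []).append(item_id)
    return buckets


def candidates(query_vec, buckets, planes):
    """Return the ids of items that hash to the same bucket as the query."""
    return buckets.get(signature(query_vec, planes), [])


planes = make_hyperplanes(dim=3, n_planes=8)
song_a = [0.9, 0.1, 0.3]  # hypothetical track embedding
items = {
    "song_a": song_a,
    "song_b": [2 * x for x in song_a],  # same direction: always the same signature
    "song_c": [-x for x in song_a],     # opposite direction: every bit flips
}
buckets = build_index(items, planes)
print(candidates(song_a, buckets, planes))
```

Vectors at a small angle agree on most hyperplane bits, so they tend to land in the same bucket. More hyperplanes make buckets smaller and more precise but miss more true neighbors; systems at scale typically use several independent hash tables to offset this.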

Transfer Learning on CIFAR-10: Benchmarking Inceptionv2, ResNet50, and VGG19 [GitHub]

- Fine-tuned pre-trained Inceptionv2, ResNet50, and VGG19 models on CIFAR-10. Inceptionv2 performed best, with 95.44% accuracy and an F1 score of 0.9567, suggesting the effectiveness of fine-tuning practices such as SGD with momentum, low learning rates, gradual unfreezing, discriminative fine-tuning, and a learning rate schedule, which were used to improve performance and prevent overfitting.

- Inceptionv2 took just over 4 hours to train on a T4 GPU, the longest of the three models, but outperformed its counterparts with the highest test accuracy and F1 score. Its architectural complexity appeared well suited to the dataset, demonstrating the potential of sophisticated models in transfer learning.

- ResNet50 offered a good balance of efficiency and accuracy on the T4 GPU, training in just under 3 hours on average and achieving 94.11% test accuracy and an F1 score of 0.9415, supporting the effectiveness of deep networks with residual learning.

- VGG19, with its large parameter count, was more susceptible to overfitting and took longer to train than ResNet50 (approximately 3 hours and 51 minutes on a T4 GPU), reaching 93.31% test accuracy and an F1 score of 0.9327. This underscores the importance of matching model architecture to dataset size.
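Two of the practices named above, discriminative fine-tuning and gradual unfreezing, can be expressed as a per-epoch learning-rate table over layer groups. The sketch below is framework-free and illustrative, not the project's training code: the layer-group names, `base_lr`, the number of epochs, and the decay factor of 2.6 per group (a value suggested in the ULMFiT paper) are all assumptions. In PyTorch, each group's rate would typically become one parameter group passed to `torch.optim.SGD` with a momentum term, and frozen groups would have `requires_grad` set to `False`.

```python
def fine_tuning_schedule(layer_groups, base_lr=1e-3, decay=2.6, n_epochs=4):
    """Return, for each epoch, the learning rate assigned to each layer group.

    layer_groups is ordered from the input (earliest layers) to the classifier head.
    Discriminative fine-tuning: a group `depth` steps below the head gets
    base_lr / decay**depth, so earlier layers change more slowly.
    Gradual unfreezing: epoch e trains only the top e + 1 groups; frozen groups get 0.
    """
    n = len(layer_groups)
    schedule = []
    for epoch in range(n_epochs):
        n_trainable = min(epoch + 1, n)
        lrs = {}
        for idx, name in enumerate(layer_groups):
            depth = n - 1 - idx  # 0 for the head, larger for earlier layers
            trainable = depth < n_trainable
            lrs[name] = base_lr / decay ** depth if trainable else 0.0
        schedule.append(lrs)
    return schedule


groups = ["stem", "block1", "block2", "head"]  # hypothetical layer groups
for epoch, lrs in enumerate(fine_tuning_schedule(groups)):
    print(epoch, {name: round(lr, 6) for name, lr in lrs.items()})
```

Training the new head first and only then unfreezing deeper layers at progressively smaller rates helps protect the pre-trained features from large early updates, which is one reason these techniques are used to limit overfitting.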

GET IN TOUCH

69 Langley Rd, Brighton

Boston, MA 02135

svyerra@bu.edu